Cognitive load scale in learning formal definition of limit: A Rasch model approach


Abstract

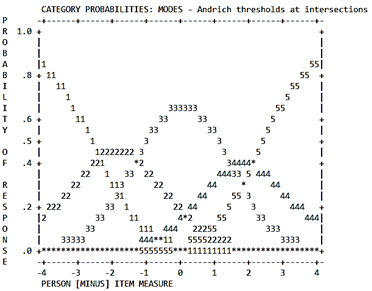

Constructing limit proofs from the formal (ε–δ) definition imposes a high cognitive load on students. Existing assessment tools, such as general cognitive load scales, are not specific to the concept of limit. This study aims to validate an instrument tailored to assess students' cognitive load when learning the formal definition of limit, addressing the need for more diverse instructional strategies. The study uses a quantitative survey design with a Rasch model approach, and data were collected through a questionnaire. The data were analyzed with respect to three aspects: (1) item fit to the Rasch model, (2) unidimensionality, and (3) rating scale functioning. A total of 315 students from three private universities in Banten participated as respondents. The findings support the validity of the cognitive load scale for the formal definition of limit, which meets the quality criteria of Rasch modeling. The results also provide evidence that the scale satisfies the monotonicity principle of the Rasch model. These outcomes contribute to a better understanding of cognitive load in learning the formal definition of limit and provide a foundation for instructional design and assessment strategies.
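The item-fit and rating-scale checks named above can be illustrated with a minimal Python sketch of the Andrich rating scale model. It assumes that person measures θ, an item difficulty δ, and category thresholds τ have already been estimated (normally by dedicated Rasch software); it does not estimate parameters, it does not cover the unidimensionality analysis, and it is not the authors' procedure. All function names and the example values are hypothetical.

```python
import math
from typing import Sequence


def category_probs(theta: float, delta: float, taus: Sequence[float]) -> list[float]:
    """Rating scale model: probabilities of categories 0..m for one person and item.

    taus holds thresholds tau_1..tau_m; tau_0 = 0 is implicit.
    """
    # Log-numerator for category k is sum_{j=1..k} (theta - delta - tau_j); category 0 is 0.
    logits = [0.0]
    for tau in taus:
        logits.append(logits[-1] + (theta - delta - tau))
    # Subtract the maximum before exponentiating for numerical stability.
    top = max(logits)
    weights = [math.exp(v - top) for v in logits]
    total = sum(weights)
    return [w / total for w in weights]


def expected_and_variance(theta: float, delta: float, taus: Sequence[float]) -> tuple[float, float]:
    """Model-expected score and its variance for one person-item pair."""
    probs = category_probs(theta, delta, taus)
    mean = sum(k * p for k, p in enumerate(probs))
    var = sum((k - mean) ** 2 * p for k, p in enumerate(probs))
    return mean, var


def item_fit(responses: Sequence[int], thetas: Sequence[float],
             delta: float, taus: Sequence[float]) -> tuple[float, float]:
    """Return (infit, outfit) mean-squares for one item.

    responses[n] is person n's observed category on this item.
    """
    sq_resid, variances, sq_std_resid = [], [], []
    for x, theta in zip(responses, thetas):
        mean, var = expected_and_variance(theta, delta, taus)
        sq_resid.append((x - mean) ** 2)
        variances.append(var)
        sq_std_resid.append((x - mean) ** 2 / var)
    # Outfit: unweighted mean of squared standardized residuals.
    outfit = sum(sq_std_resid) / len(sq_std_resid)
    # Infit: squared residuals weighted by model variance (information).
    infit = sum(sq_resid) / sum(variances)
    return infit, outfit


def thresholds_ordered(taus: Sequence[float]) -> bool:
    """True if the threshold estimates increase with category."""
    return all(a < b for a, b in zip(taus, taus[1:]))


def average_measures_advance(responses: Sequence[int], thetas: Sequence[float],
                             n_categories: int) -> bool:
    """True if the mean person measure rises across observed categories."""
    groups: list[list[float]] = [[] for _ in range(n_categories)]
    for x, theta in zip(responses, thetas):
        groups[x].append(theta)
    means = [sum(g) / len(g) for g in groups if g]  # unused categories are skipped
    return all(a < b for a, b in zip(means, means[1:]))


if __name__ == "__main__":
    # Hypothetical five-category item and four respondents.
    taus = [-1.5, -0.5, 0.5, 1.5]
    thetas = [-1.2, 0.1, 0.8, 1.9]
    responses = [0, 2, 3, 4]
    print(item_fit(responses, thetas, delta=0.2, taus=taus))
    print(thresholds_ordered(taus), average_measures_advance(responses, thetas, 5))
```

In practice, mean-square values are judged against a cutoff range; a commonly cited guideline treats 0.5 to 1.5 as productive for measurement, though the criteria used in this study may differ. The two monotonicity checks correspond to the usual rating-scale diagnostics: thresholds that advance with category, and average person measures that rise with each higher response category.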


This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.
